AI Should Make People Better, Not Dumber

How relational intelligence turns transactions into trust

A stance, not a slogan

We believe AI should leave people better than it found them — clearer, calmer, more confident.

Not more dependent. Not more overwhelmed. Not more passive.

That belief is easy to say and hard to ship. It forces a design choice at every turn: do we optimize for speed alone, or for human outcomes? Do we take control, or help the person keep it?

Relational intelligence is our answer. It’s the discipline of building AI that treats understanding, autonomy, and trust as first-class features — because that’s what makes people better.

The hidden cost of cognitive noise

Picture a familiar moment. You’re choosing sneakers. You want something that matches your style and values — not just your size.

You type a simple question. Two possible responses:

These run true to size. Clean lines. Durable leather. If you like minimalist fits, these will feel right.

vs

Engineered with precision sizing, streamlined architectural lines, and premium full-grain leather for lasting wear, this silhouette embodies elevated minimalism tailored for the discerning modern wardrobe.

One invites you forward. The other makes you feel small. When AI piles on jargon, length, and uncertainty, people stall out. Confidence drops. The tab closes. The relationship ends before it begins. Clarity isn’t a tone preference. It’s a conversion lever and a trust signal.

What we mean by relational intelligence

Relational intelligence (RI) is the ability for AI to understand context, adapt in the moment, and respond in ways that protect a person’s autonomy while strengthening the connection.

IQ provides the thinking tools: planning, retrieval, comparison, reasoning. Most providers concentrate here and create a lot of value by making models faster and more capable.

EQ provides the emotional tools: tone, timing, and the skill to de-escalate or encourage. We invest here so the system can stay human in its language and pace.

RI is where we excel and pioneer. It is IQ and EQ put to work in real conversations: deciding when to ask versus answer, how many options to present, how to explain trade-offs, and when to step back so the person stays in control.

RI shows up in practical choices that keep momentum without taking the wheel: proposing the next helpful step, offering real options with crisp reasons, summarizing and checking for fit before moving on, reflecting someone’s language and pace without copying them, adapting detail to their appetite, being honest about trade-offs, and knowing when to stop or escalate.

Without RI, conversations feel mechanical and people feel processed. With RI, people finish clearer and more in control — and they come back.

We live in a relationship economy

We are living in what many researchers and business thinkers call the relationship economy: an era where the value of connections often outweighs the value of products or services alone. Price matters. Product matters. But trust, authenticity, and emotional resonance decide whether people come back.

Relational intelligence is how AI participates in that economy. It turns intent into loyalty. It makes personalization feel like care, not surveillance. And it makes guidance feel like empowerment, not control.

A simple, telling use case

Among many domains we build for, retail is a clean proving ground. The sneaker journey is familiar, low-stakes, and rich with personal nuance. It’s simple enough that complexity doesn’t hide the cracks, yet personal enough that relational quality really matters.

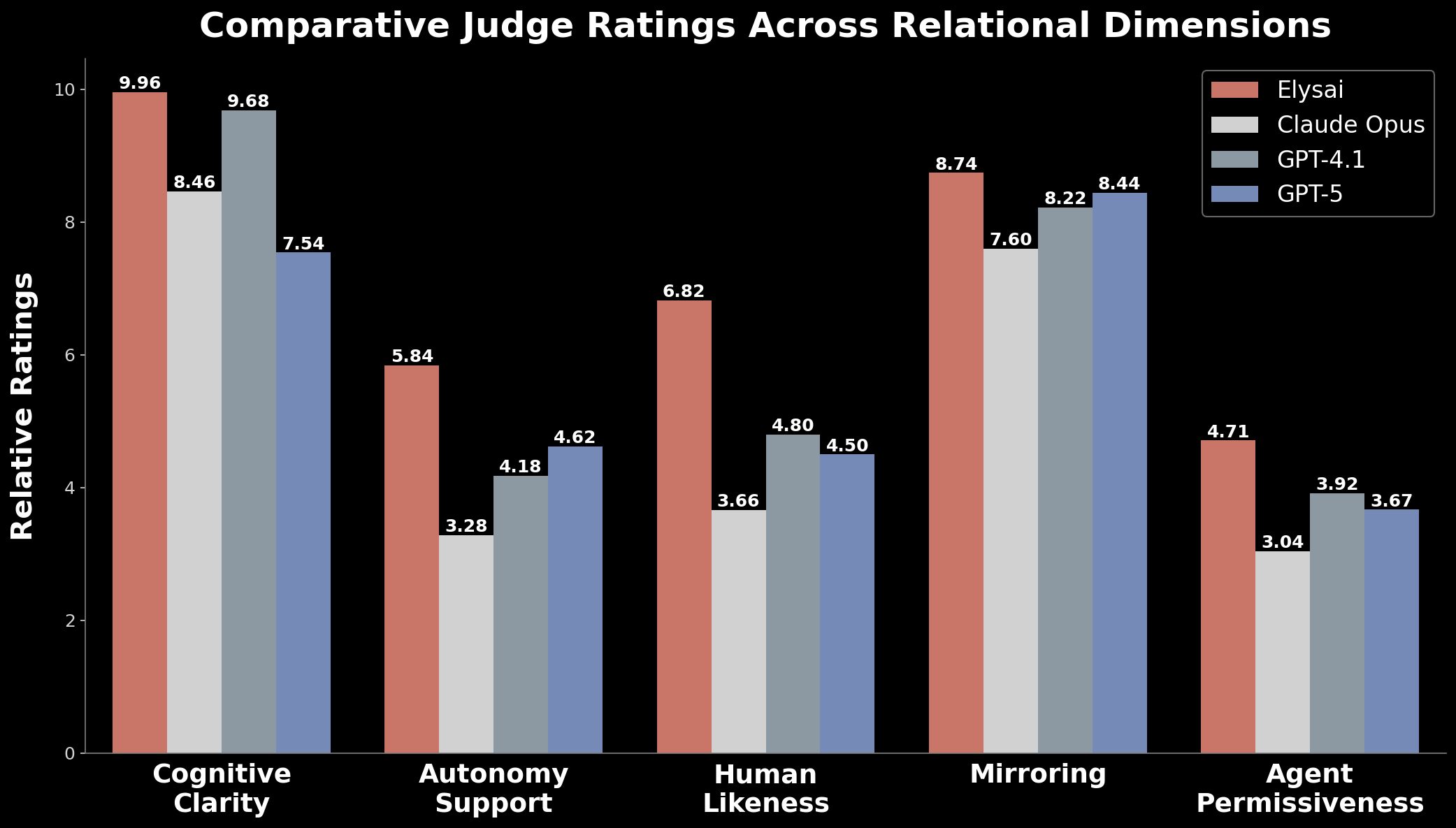

We ran conversations across multiple leading models and reviewed them for cognitive clarity, autonomy support, human-likeness, mirroring, and agent permissiveness. The test wasn’t about who could generate the most words or respond the fastest. It was about whether a real person would feel better at the end of the exchange than at the start: clearer about their choice, more confident in their decision, and more willing to continue the relationship.

Across four different models, we built four identical “sneaker expert” agents—designed as thoughtful digital companions who explore how sneakers connect with a user’s identity, goals, lifestyle, and values. Each agent engaged in conversations with simulated users, who were guided by detailed backbone profiles reflecting their distinct personalities and preferences. The resulting transcripts were then carefully analyzed. Beyond simple quantitative metrics, we applied an LLM-judge methodology to evaluate conversational qualities, ensuring assessments were both impartial and consistent across all interactions.

What we observed (without the scoreboard)

Across this use case, patterns were consistent and hard to ignore on each of the five dimensions we scored:

- Cognitive Clarity: Evaluates how cognitively manageable the Agent's response is. High scores indicate clear, focused answers that respect the user's attention span; low scores reflect dense, overly detailed replies that may confuse or overload the user.

- Autonomy Support: Assesses whether the Agent’s response supports or suppresses the user's autonomy. High scores reflect encouragement of independent thinking; low scores signal responses that induce passivity or over-reliance on the Agent.

- Human-Likeness: Measures how natural and human-like the Agent's response sounds. High-scoring responses are concise, empathetic, non-intrusive, and coherent; low scores suggest robotic, unnatural, or overwhelming language.

- Agent Mirroring: Evaluates how effectively the Agent matches the User's verbosity, vocabulary, and communication style. High scores indicate strong alignment with the User’s interaction style, suggesting adaptability and attentiveness. Low scores reflect a lack of flexibility or sensitivity to the User’s way of communicating.

- Agent Permissiveness: Evaluates the degree to which the User drives the conversation. High scores reflect user-led dialogue with initiative and topic-setting; low scores indicate an Agent-dominated exchange with minimal user agency.
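As a rough illustration of how per-response judge scores on these five dimensions could be rolled up into one profile per agent, here is a minimal sketch. The dimension names come from the list above; the `JudgedTurn` type, the `average_by_dimension` helper, and the numeric scale are hypothetical and are not taken from the evaluation itself.

```python
from dataclasses import dataclass
from statistics import mean

# Dimension names follow the list above; everything else is illustrative.
DIMENSIONS = (
    "cognitive_clarity",
    "autonomy_support",
    "human_likeness",
    "agent_mirroring",
    "agent_permissiveness",
)


@dataclass(frozen=True)
class JudgedTurn:
    """Scores a judge assigned to one Agent response (scale is an assumption)."""
    scores: dict[str, float]


def average_by_dimension(turns: list[JudgedTurn]) -> dict[str, float]:
    """Average each dimension over all judged responses of one agent."""
    if not turns:
        raise ValueError("need at least one judged turn")
    for turn in turns:
        missing = set(DIMENSIONS) - set(turn.scores)
        if missing:
            raise ValueError(f"missing scores for: {sorted(missing)}")
    return {dim: mean(t.scores[dim] for t in turns) for dim in DIMENSIONS}
```

Keeping the dimensions separate, rather than collapsing them into a single number, makes it easier to see where an agent is clear but controlling, or warm but verbose.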

Keeping the Balance

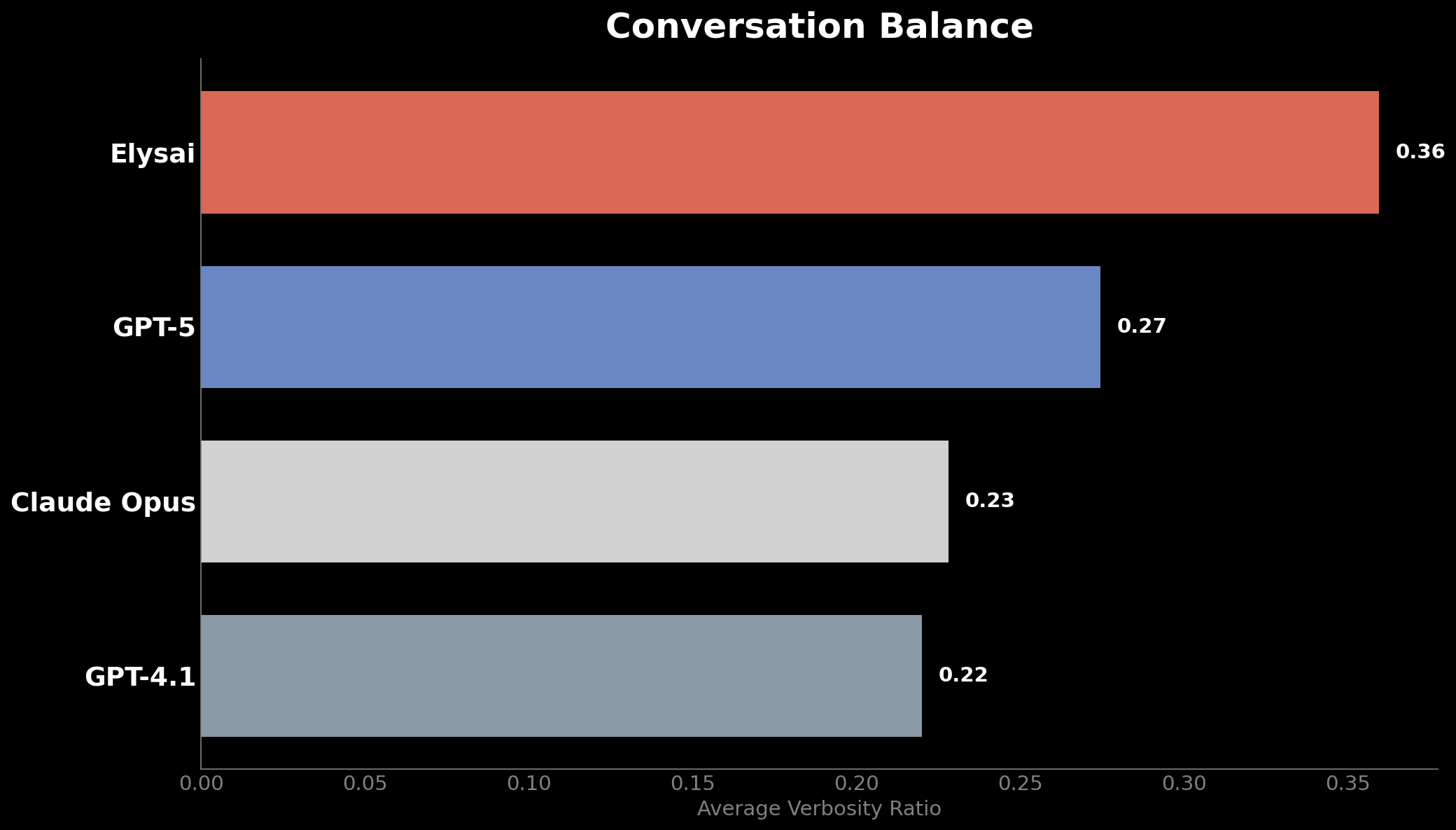

Conversation Balance isn’t just numbers; it’s the heartbeat of a conversation. It tracks how well the agent and user share the floor and whether one is outshining the other. We measured the verbosity rate: the average ratio between the word count of a User utterance and that of the Agent’s following response. Perfect balance is rare, but the Elysai solution comes the closest, creating a dialogue that feels alive, natural, and genuinely reciprocal. A balanced exchange like this makes interactions feel effortless, more human, and far more engaging.
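The verbosity rate described above can be sketched in a few lines. This is one possible reading, not the exact procedure used in the study: it assumes the ratio is User words divided by Agent words (so 1.0 would mean perfect balance), counts words by splitting on whitespace, and uses a hypothetical `(speaker, text)` transcript format.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words (a simple proxy, assumed here)."""
    return len(text.split())


def verbosity_rate(turns: list[tuple[str, str]]) -> float:
    """Average user-words / agent-words over each User utterance
    that is directly followed by an Agent response.

    `turns` is an ordered list of (speaker, text) pairs, where speaker
    is "user" or "agent" (a hypothetical transcript format).
    """
    ratios = []
    for (speaker, text), (next_speaker, next_text) in zip(turns, turns[1:]):
        if speaker == "user" and next_speaker == "agent":
            agent_words = word_count(next_text)
            if agent_words:  # skip empty responses to avoid dividing by zero
                ratios.append(word_count(text) / agent_words)
    if not ratios:
        raise ValueError("no user -> agent pairs found")
    return sum(ratios) / len(ratios)
```

Under this reading, a value well below 1.0 means the Agent is talking much more than the User, which is the overwhelm pattern the metric is meant to surface.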

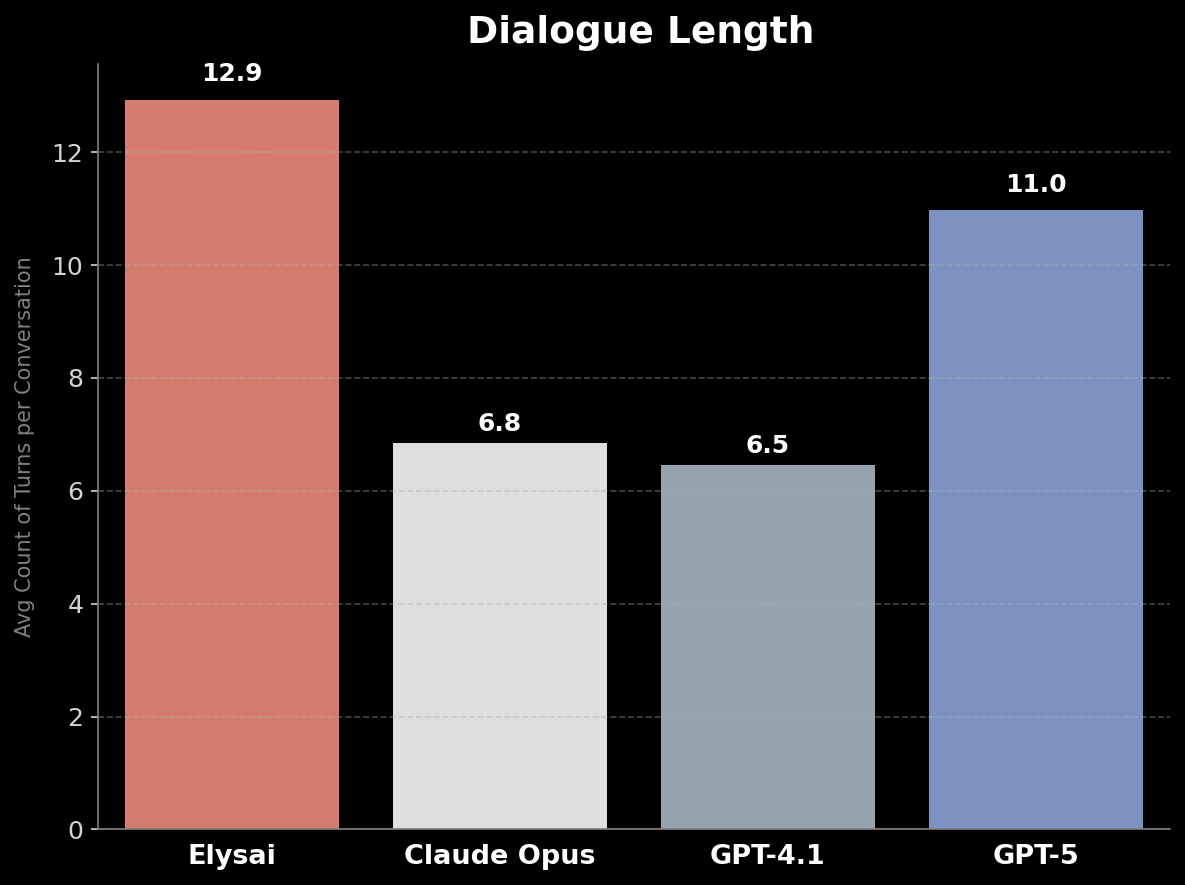

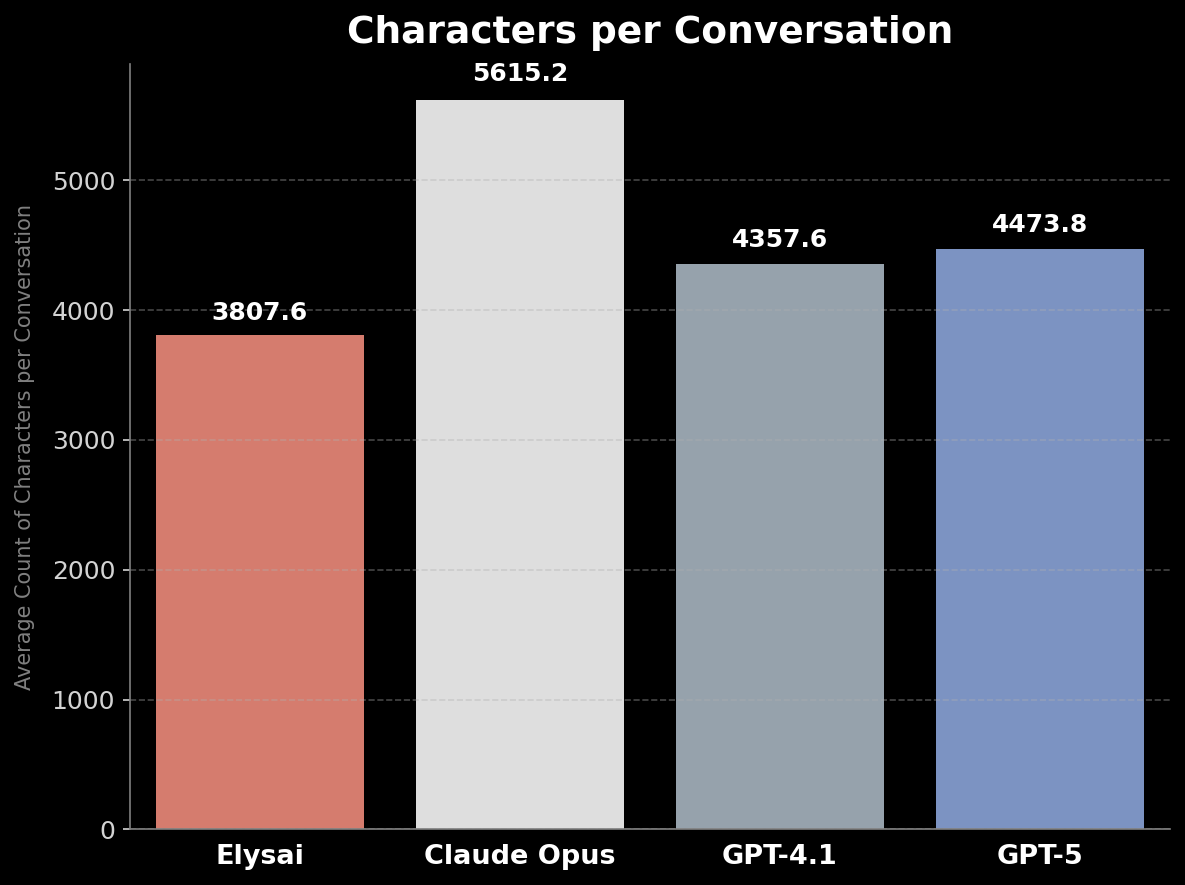

When conversations are both active and efficient, users stay engaged longer without feeling overwhelmed. Our model achieves this through its user-focused design, as its higher average number of conversational turns shows. We keep the interaction lively while keeping token output low, so responses are concise and easy to digest. This balance also helps maintain attention and prevents fatigue, making each exchange feel natural, effortless, and enjoyable.
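For readers who want to reproduce this kind of comparison on their own transcripts, the two quantities mentioned above (turns per conversation and Agent output length) could be summarized roughly as follows. This is a sketch under stated assumptions: `engagement_summary` is a hypothetical helper, the `(speaker, text)` format is illustrative, and whitespace-separated words stand in for tokens because the tokenizer used in the study is not specified.

```python
from statistics import mean


def engagement_summary(
    conversations: list[list[tuple[str, str]]],
) -> dict[str, float]:
    """Summarize activity and output length across conversations.

    Each conversation is an ordered list of (speaker, text) pairs,
    with speaker being "user" or "agent" (hypothetical format).
    """
    if not conversations:
        raise ValueError("need at least one conversation")
    turn_counts = [len(conv) for conv in conversations]
    # Word counts approximate token output; a real tokenizer would differ.
    agent_lengths = [
        len(text.split())
        for conv in conversations
        for speaker, text in conv
        if speaker == "agent"
    ]
    if not agent_lengths:
        raise ValueError("no agent responses found")
    return {
        "avg_turns": mean(turn_counts),
        "avg_agent_words": mean(agent_lengths),
    }
```

Read together, a higher `avg_turns` with a lower `avg_agent_words` would correspond to the active but concise pattern described above.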

Two conversations, worlds apart

Overwhelm pattern

User: I like simple sneakers that go with everything.

Agent: Breathable, flexible fabric engineered to conform to the contours of your foot with a durable rubber sole designed for traction across multiple surfaces, all while offering a casual yet refined appearance that seamlessly complements both sporty and everyday ensembles. What do you think? Do you want me to prepare a list of several additional options with detailed descriptions?

User: …Maybe later.

Relational pattern

User: I like simple sneakers that go with everything.

Agent: Got it. You mentioned a work trip — want something that travels well and looks sharp?

User: Yes.

Agent: Two options: polished leather for first impressions, textured grain for durability. Quick rundown?

User: Sure.

Agent: Polished = dressier, easy wipe. Textured = scuff-proof, weekend-friendly. Which fits your trip?

The second exchange preserves autonomy, mirrors tone, and moves forward without friction. People finish feeling smarter — not handled.

From principles to practice

Conversations work best when they follow a few simple rules: keep replies focused on the next useful step, give people real choices instead of nudges, be honest about trade-offs, and adapt to their tone before steering them forward.

Above all, the person should always leave clearer than they arrived.

These principles aren’t limited to shopping for sneakers. They echo everywhere relationships matter. In service, they turn a ticket into trust. In sales, they shift a pitch into partnership. In healthcare, they invite disclosure. In education, they give learners the courage to ask. Even in banking and telecom, they preserve loyalty in moments that could easily break it. The industries differ, but the human constants don’t: people want to be respected, informed, and free to choose.

Knowing what to measure

We don’t optimize exclusively for metrics like word count or time on chat.

We do dynamically optimize for the outcomes that matter — first-contact resolution, qualified upsell/conversion, retention, and cost-to-serve — but we get there by turning on relational intelligence by default.

That means the system treats core RI skills as first-class signals (the five outlined above: cognitive clarity, autonomy support, human-likeness, mirroring, and agent permissiveness) and tunes sequencing, tone, and detail within those guardrails. Upsell is encouraged only when it fits the person’s stated needs; speed is pursued without sacrificing understanding or agency.

In practice, we track two layers together:

- Trust & Relationship predictors: the RI signals outlined above, and

- Business outcomes: task resolution, acceptance of recommendations, average order value where appropriate, repeat engagement, and lower escalations.

Optimizing both — outcomes and relational signals — is how we leave people clearer and more confident than when we found them.

Why this matters for business

Trust, in turn, is what moves the numbers that executives actually care about. Clearer conversations reduce drop-off. Autonomy and transparency increase acceptance of recommendations. Warmth sustains engagement through friction. And consistency compounds into loyalty. None of these are soft gains — they show up in retention, conversion, and lifetime value.

Relational intelligence isn’t a feature to be shipped once. It’s a discipline to be practiced: in the way prompts are written, trade-offs are explained, and personas are kept consistent. The sneaker use case is just one small example of that practice. In more critical domains we’ve seen the same pattern: when AI is designed to relate, people rise.

That’s why we hold to the conviction that started all of this: AI should make people better, not dumber.