Elysai 1.0: What 500,000 Users Revealed About Relational AI

How half a million people showed us that trust is the foundation of digital interactions

A beginning rooted in wellbeing

You don’t expect a stranger to tell a piece of software, “I feel invisible in my own home.”

But they did—again and again.

We launched Elysai as a B2C wellbeing app: a conversational “digital human” anyone could talk to—no coach required, no scheduling, no pressure. Built in the pre-GPT era, the system was simple on purpose: show up consistently, listen carefully, respond with respect. No streaks, no pushy prompts. Just a private place to say what’s hard to say out loud.

What followed wasn’t typical app engagement. People treated Elysai less like a tool and more like a trusted space. Personal disclosures surfaced where we expected light conversation. When disclosure happened, dialogues stretched and deepened. Trust didn’t arrive as a marketing metric; it showed up in how people behaved.

That changed our view of scale. Consumer distribution gave us reach, but it also revealed the limits of going it alone: high acquisition costs, fragmented use cases, and a duty of care that demands continuity we couldn’t fully control outside people’s real-life journeys. Meanwhile, the moments where trust moves outcomes—owning a problem, sharing a symptom, asking for help, choosing a product—already live inside organizations with the guardrails, integrations, and measurement to turn relational intelligence into impact. To scale what people valued (not just the download count), Elysai needed to meet them where those decisions happen. That’s the bridge from B2C to B2B: not a change in mission, a change in surface area.

Insights that made the path obvious

- 500,000+ users engaged worldwide

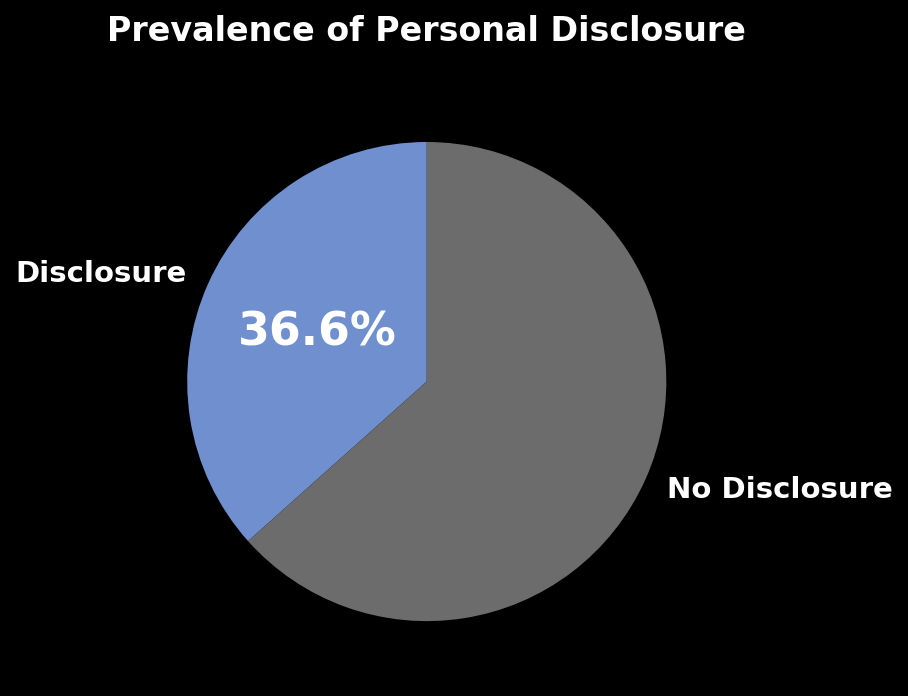

- 36.6% of conversations included disclosure

- +25.8% longer conversations when disclosure occurred

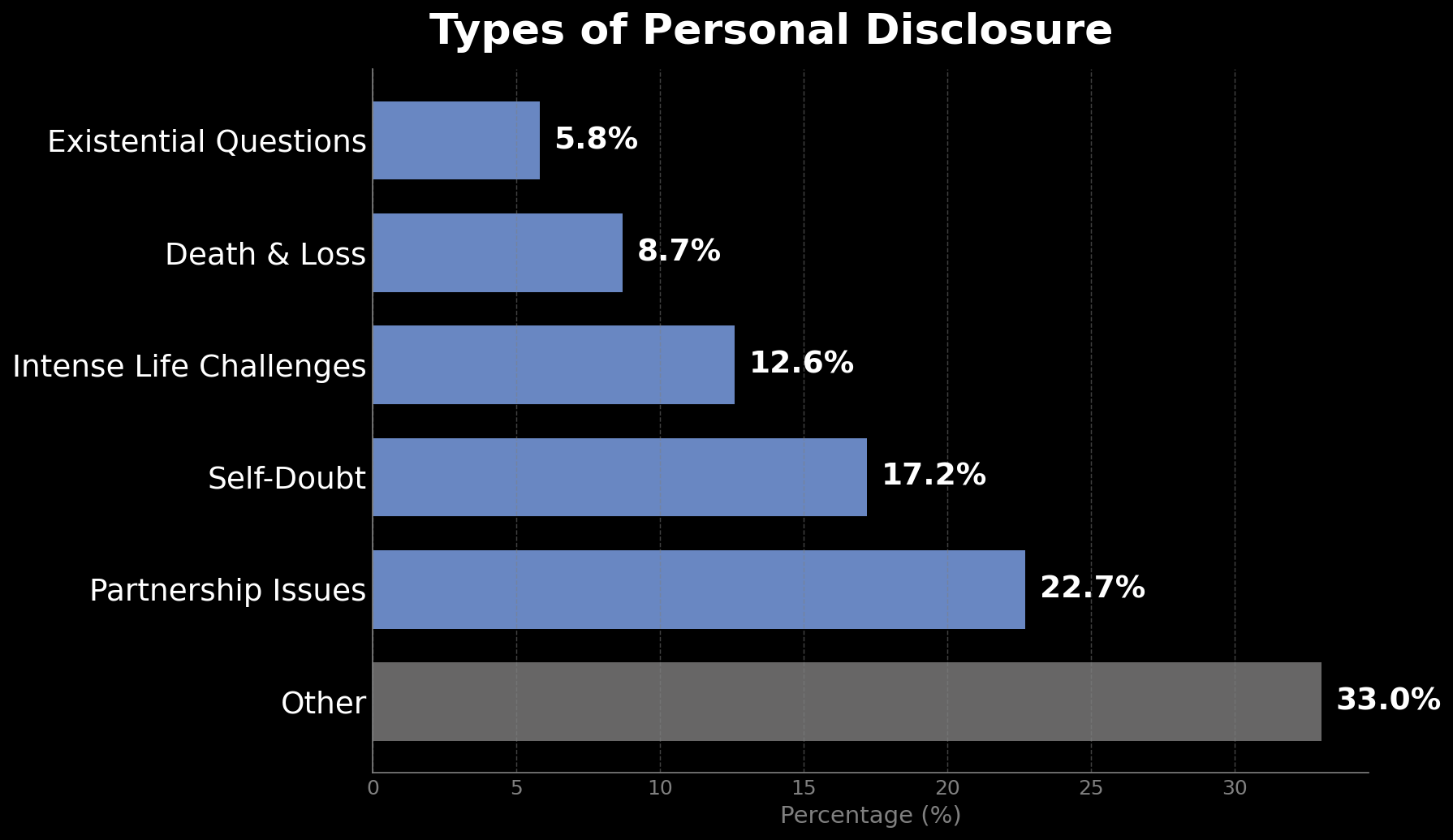

- Topics: relationships, self-doubt, grief, existential questions

What people shared

Not every exchange was personal. Plenty were everyday check-ins. But a meaningful share went far past small talk. In a representative slice of longer conversations, over a third included personal disclosure—the moment someone stops narrating their day and says what’s actually weighing on them.

Relationships under strain:

“My partner doesn’t listen to me anymore. I feel invisible in my own home.”

What began as “we’ve been arguing” often turned into questions about boundaries, feeling unheard, and how to repair trust.

Deep insecurity:

“I feel like I’ll never be good enough. No matter what I do, I end up disappointing everyone.”

People named shame, imposter feelings, and the fear that progress wouldn’t stick—looking for language to reframe the story they tell themselves.

Loss:

“Since my father died, I can’t seem to move forward. The world feels empty without him.”

Grief surfaced as numbness, guilt, and the quiet logistics of mourning—how to get through a day, a birthday, an anniversary.

Existential uncertainty:

“What if none of this matters? What if life has no real purpose?”

These weren’t abstract debates; they were late-night questions about meaning, responsibility, and what to do next.

These are not topics people share lightly. Yet they appeared again and again at scale. The specifics differed, but the pattern didn’t: when the space felt safe, people chose to tell the truth—and once they did, the conversation deepened.

Engagement grows when trust grows

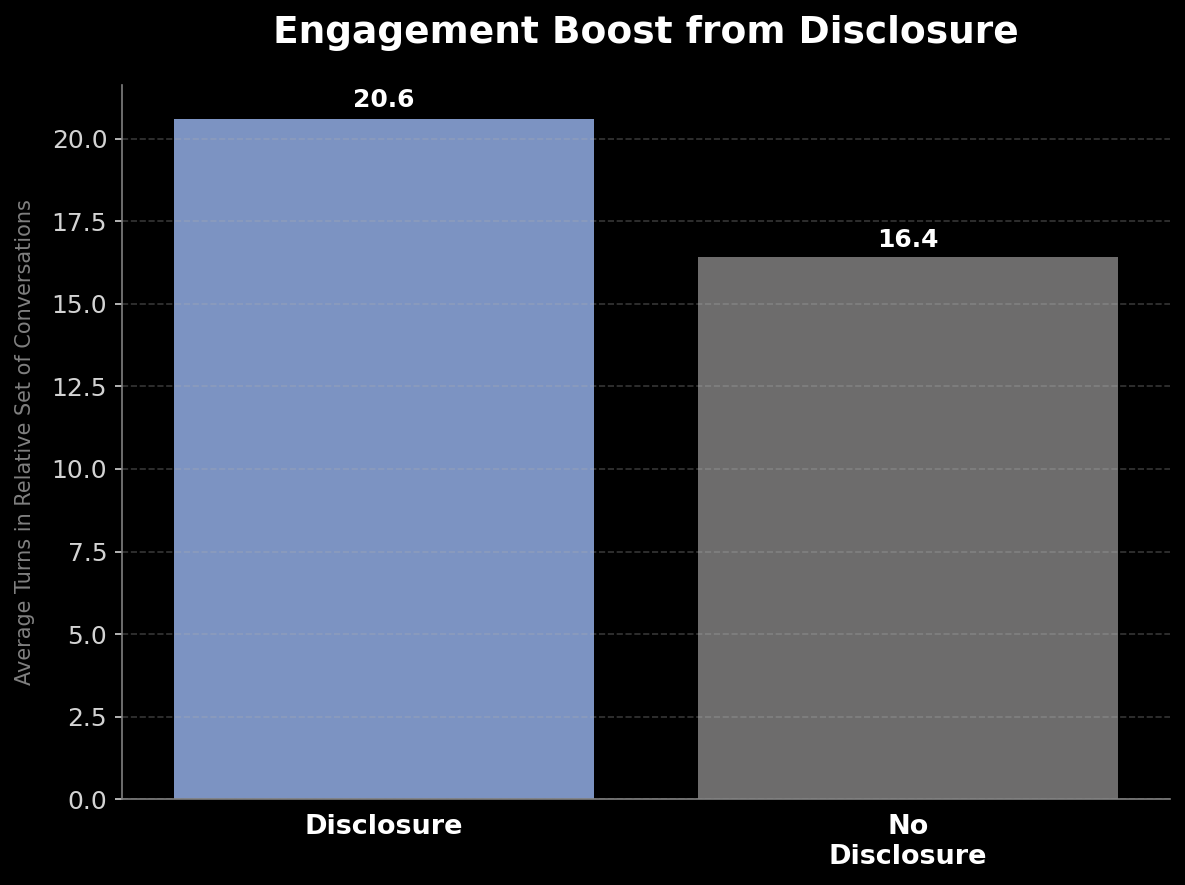

Trust showed up not only in what people shared, but in how long they stayed. We measured conversations in “turns” (one user message + one reply). When a user chose to disclose something personal, the thread averaged 20.6 turns; without disclosure, it averaged 16.4—a 25.8% lift.

That extra time isn’t cosmetic. More turns mean more context captured, fewer misreads, and room to check understanding before suggesting a next step.

We analyzed an anonymized, representative slice of longer sessions and defined disclosure as user-initiated personal information in the user’s own words. The conversations were then analyzed with an LLM-as-judge method that identified and categorized the personal and intimate information shared. The analysis explicitly excluded purely factual personal information (name, age, city) and superficial statements.
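For readers who want to see the arithmetic, here is a minimal sketch of how a turn count and a relative lift could be computed. It is an illustration under stated assumptions, not the pipeline we ran: the function names, the `(role, text)` message format, and the `"user"` / `"assistant"` role labels are all hypothetical. Note that the rounded averages quoted above (20.6 and 16.4) yield roughly 25.6%; the reported 25.8% presumably comes from the unrounded averages.

```python
from statistics import mean


def count_turns(messages):
    """Count turns, where one turn = one user message followed by one reply.

    `messages` is a hypothetical list of (role, text) pairs.
    """
    turns = 0
    awaiting_reply = False
    for role, _text in messages:
        if role == "user":
            awaiting_reply = True
        elif role == "assistant" and awaiting_reply:
            turns += 1
            awaiting_reply = False
    return turns


def relative_lift(with_disclosure, without_disclosure):
    """Percentage increase of the first group's mean over the second's."""
    return (mean(with_disclosure) / mean(without_disclosure) - 1) * 100


# Lift recomputed from the rounded averages quoted in the text.
lift_from_rounded = (20.6 / 16.4 - 1) * 100
print(f"{lift_from_rounded:.1f}%")  # prints "25.6%"
```

In practice, `relative_lift` would take the per-conversation turn counts for each group, so the result reflects the full-precision averages rather than the rounded ones.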

The takeaway is practical: create conditions for trust and people keep talking; when they keep talking, problems surface and get resolved. In enterprise settings, those extra turns are often the difference between churn and closure, abandonment and completion.

Trust lengthened the dialogue.

The pre-GPT breakthrough

It’s important to remember the context. This technology was created before GPT, before the current era of large foundation models. We were working with far less powerful NLP systems — stitched together, fragile, and limited.

By today’s standards, the technology was basic — and yet, people still opened up. They still disclosed their fears, doubts, grief, and hopes.

That was the breakthrough. It showed that relational intelligence doesn’t depend on raw horsepower. It depends on how the system relates — its ability to be consistent, to listen, and to respond with empathy. Even primitive tools, when designed with care, created an environment safe enough for disclosure.

Why people opened up

Why would someone confide something so personal to a digital human? Looking back, we can see that disclosure wasn’t a fluke — it was the natural result of how the interaction was designed.

First, anonymity lowered the barrier. For many users, speaking to a digital human felt safer than talking to a person face to face. There was no fear of judgment, no risk of social consequences. A digital human wouldn’t laugh, gossip, or break confidence. That privacy gave people permission to voice things they might otherwise have held back.

Second, consistency built confidence. Humans can be unpredictable in their responses, but a digital human is reliably present. It doesn’t tire, it doesn’t get irritated, it doesn’t check out. For users, that steadiness mattered. Each respectful, measured reply reinforced the sense that this was a space where they could continue exploring their thoughts.

Third, empathy created safety. Even in the pre-GPT era, our system could reflect back an understanding of what was being said. A simple acknowledgment — “That sounds really hard” — could be enough to signal care. That signal, repeated over the course of a conversation, helped build the confidence to go deeper.

Together, these elements made disclosure possible. But the key is this: the choice to open up wasn’t an accident. It was an act of trust, repeated thousands of times across half a million users.

And trust is the raw material of relational intelligence. It’s what transforms an exchange of words into a meaningful relationship. It’s what allows AI to not just provide answers, but to provide support. These early disclosures gave us more than data — they gave us a glimpse into how digital humans could play a role in people’s lives.

From wellbeing to enterprise

At first, our goal was narrow: to build something that could support individual wellbeing. But the lessons from half a million users quickly showed us the implications were much bigger.

If people could build trust with a digital human in a wellbeing app, then organizations could build trust with digital humans in every domain where relationships matter.

- In customer service, trust determines whether someone feels dismissed or valued. A digital human that responds with empathy can turn a frustrating interaction into one that strengthens loyalty.

- In sales, trust determines whether someone walks away or leans in. Relational AI can guide, reassure, and build the confidence needed to make decisions.

- In healthcare, trust determines whether patients disclose their real concerns. When people feel safe, they share the symptom that might otherwise go unnoticed.

- In education, trust determines whether a learner stays silent or asks the question they’re embarrassed to ask — often the very question that unlocks progress.

Once you see disclosure at scale, the conclusion is clear: trust isn’t optional. It’s foundational. And crucially, trust can be earned — even by AI.

That’s why we came to see our wellbeing app differently. It wasn’t just a product experiment. It was proof that digital relationships are real. And when trust is present, conversations stop being transactions. They become relationships.

Since those early days, we’ve worked with some of the largest organizations in the world — from telecommunications to banking — to bring relational intelligence into enterprise contexts. What began as an experiment in personal wellbeing is now transforming how global companies engage their customers. And the results confirm what we saw in the B2C era: people are ready to trust digital humans, if those systems are built with care.

We are now more convinced than ever: relational AI isn’t just about making conversations more efficient. It’s about reshaping the very fabric of how people relate to organizations at scale.

The foundation of relational AI

Elysai 1.0 was our first step, but it was never the end. It proved that relational intelligence is possible, that it works, and that it scales.

Half a million users taught us something simple but profound: people are ready to relate to digital humans, as long as those systems are built to earn trust. That insight has become the foundation of our work.

Relational AI isn’t about replacing people. It’s about designing digital humans that can hold space for them — in wellbeing, in customer experience, in healthcare, in education. In every domain where trust defines the outcome, relational intelligence makes the difference.